The first four articles in this series are a quick guide to getting started with audio and MIDI on Raspberry Pi 2:

- Get started with Raspbian Jessie and Raspberry Pi 2

- Get started: Linux ALSA and JACK

- Raspberry Pi soft synthesizer: Get started

- USB audio for Raspberry Pi

Although the articles address Raspbian JESSIE, the HOW-TOs should be able to get you started with pretty much any version of Linux.

I showed how to use a simple monophonic soft synthesizer (amsynth) in part 3. Now, it’s time to move on to a multi-timbral synth: FluidSynth. FluidSynth has a graphical front-end, Qsynth, and I’ll demonstrate Qsynth, too. This tutorial assumes that JACK (and/or ALSA) is properly configured. The second and third articles will help you with configuration.

The Web sites for FluidSynth and Qsynth are:

Please visit these sites to learn about the advanced capabilities that these programs offer. You can always consult the manual pages while you are working:

man fluidsynth

man qsynth

man qjackctl

man aplay

or you can request help directly, e.g., fluidsynth --help.

Installation

Installation is a breeze:

sudo apt-get install fluidsynth

sudo apt-get install qsynth

These commands should automatically download and install the General MIDI SoundFont. The path name for the GM SoundFont is:

/usr/share/sounds/sf2/FluidR3_GM.sf2

If you did not get the GM SoundFont by installing Qsynth or FluidSynth, then enter the command:

sudo apt-get install fluid-soundfont-gm

to install it. If you want a Roland GS-compatible SoundFont, install it with the command:

sudo apt-get install fluid-soundfont-gs

The General MIDI SoundFont file is about 140 MBytes and the GS-compatible SoundFont file is about 32 MBytes.
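A quick way to confirm what actually got installed is to test for the two files by name. The sketch below is only a convenience of mine: the function name list_soundfonts is made up for this example, and the default directory is the Raspbian path given above.

```shell
# list_soundfonts: report which of the two SoundFont files named in this
# article are present. The function name is hypothetical; the optional
# directory argument defaults to the Raspbian install location.
list_soundfonts() {
    dir="${1:-/usr/share/sounds/sf2}"
    for name in FluidR3_GM.sf2 FluidR3_GS.sf2; do
        if [ -f "$dir/$name" ]; then
            echo "found: $dir/$name"
        else
            echo "missing: $dir/$name"
        fi
    done
}
```

Run list_soundfonts with no argument to check the default location, or pass another directory if your distribution puts SoundFonts somewhere else.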

FluidSynth

Although you’re most likely to use FluidSynth via Qsynth, it’s worth discussing FluidSynth’s unique capabilities first. Some things can be done quite handily from the command line. The number of FluidSynth’s command line options can be overwhelming, so if you skip to Qsynth, that’s understandable.

FluidSynth is a multi-timbral software synthesizer based on the SoundFont 2 specifications. It is a command line application program that accepts MIDI input from either a MIDI controller keyboard or a software MIDI sequencer. FluidSynth needs a SoundFont file containing instrument definitions and samples. It plays the incoming notes using the selected SoundFont instruments. FluidSynth supports sixteen MIDI channels by default. It provides chorus and reverb effects.

There are many SoundFonts available for download from the Web. Two of the best-known and most widely used SoundFonts are:

- FluidR3_GM.sf2: A General MIDI sound set

- FluidR3_GS.sf2: A Roland GS-compatible sound set

The General MIDI sound set is pretty good; don’t let the “General MIDI” label drive you away!

FluidSynth has three main usage modes:

- Interactive command mode.

- One-liner mode. “One-liner” is my name for this mode of operation.

- Server mode.

If you just type fluidsynth on the command line, FluidSynth launches into its interactive mode, i.e., FluidSynth accepts and interprets commands of its own. I won’t go into interactive mode here, but suffice it to say that you can set parameters, load SoundFont files, etc. using FluidSynth commands. Enter help when you are in interactive mode to get information about commands and parameters. Interactive mode is a good way to explore FluidSynth configuration so that you can then write out complicated combinations of FluidSynth command line options.

“One-liner mode” (option -i) launches FluidSynth without dropping into its interactive mode. You’re most likely to use this mode when launching FluidSynth from a shell script or if you just have a simple job to do from the command line.

One-liner mode means that you need to dive into FluidSynth’s command line options. There are many command line options including:

- -C, --chorus: Turn chorus ON or OFF

- -R, --reverb: Turn reverb ON or OFF

- -K, --midi-channels: Set the number of MIDI channels

- -j, --connect-jack-outputs: Connect JACK outputs

- -F, --fast-render: Render MIDI to an audio file

- -O, --audio-file-format: Audio file format for fast rendering

- -r, --sample-rate: Set the sample rate

- -T, --audio-file-type: Audio file type for fast rendering

- -i, --no-shell: Don’t run in interactive mode

- -s, --server: Start FluidSynth as a server process

A full list of command line parameters is given in the FluidSynth User Manual.

One-liner mode handles two everyday tasks without a lot of GUI hoopla:

- Play back MIDI given a list of MIDI files on the command line.

- Render a MIDI file to an audio file (fast render).

FluidSynth looks for command line options, followed by a SoundFont file, followed by a list of MIDI files. Enter the following command to play back a MIDI file (“EvilWays.mid” in these examples) through an ALSA audio port such as the 3.5mm stereo jack on the Raspberry Pi 2:

fluidsynth -a alsa -n -i /usr/share/sounds/sf2/FluidR3_GM.sf2 EvilWays.mid

The -a option selects the ALSA audio driver, -n suppresses MIDI input, and -i suppresses interactive mode. ALSA should be configured to use the 3.5mm audio jack. (See the second article in this series about ALSA and JACK.)
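Since the command line is always the same apart from the list of MIDI files, it is easy to wrap in a shell function. This is only a sketch: the function name play_midi and the variables SOUNDFONT and DRY_RUN are names I made up for the example, not anything FluidSynth defines. The fluidsynth options are exactly the ones shown above.

```shell
# SOUNDFONT and DRY_RUN are hypothetical variable names used by this sketch.
SOUNDFONT="${SOUNDFONT:-/usr/share/sounds/sf2/FluidR3_GM.sf2}"

# play_midi: play one or more MIDI files through ALSA without MIDI input
# and without the interactive shell. With DRY_RUN=1 the command is only
# printed, which is handy for checking what would be run.
play_midi() {
    if [ "$#" -eq 0 ]; then
        echo "usage: play_midi FILE.mid [FILE.mid ...]" >&2
        return 1
    fi
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo fluidsynth -a alsa -n -i "$SOUNDFONT" "$@"
    else
        fluidsynth -a alsa -n -i "$SOUNDFONT" "$@"
    fi
}
```

For example, play_midi EvilWays.mid plays a single file, and several file names on the same line are passed to FluidSynth as a list.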

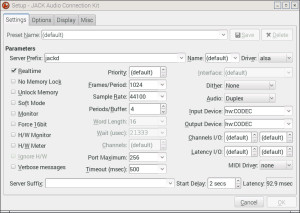

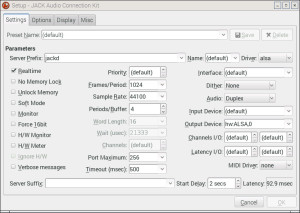

If you prefer to use JACK instead of vanilla ALSA, start the JACK server via qjackctl. (See the third article in this series about using JACK with a soft synth.) Then, enter the following command:

fluidsynth -a jack -j -n -i /usr/share/sounds/sf2/FluidR3_GM.sf2 EvilWays.mid

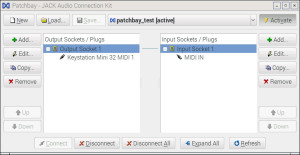

The -a option selects JACK, and the -j option connects the audio output of FluidSynth to the system audio output. If you leave out the -j option, the audio connection is not made and you will be left wondering why there isn’t any sound coming from your speakers! You can also make this connection in the qjackctl Connections or Patchbay windows. In practice, if you aren’t getting audio output or MIDI, check your connections in JACK; audio or MIDI connections may be missing.
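If you would rather check the audio connection from a terminal, the jack_lsp utility can list ports together with their connections (jack_lsp -c). The sketch below filters that listing. It rests on assumptions you should verify on your own system: that FluidSynth registers its JACK ports under a client named "fluidsynth", that the playback ports are named "system:playback_N", and that jack_lsp -c prints each port name unindented with its connections indented underneath. The function name fluidsynth_connected is hypothetical.

```shell
# fluidsynth_connected: read the output of `jack_lsp -c` on standard input
# and succeed if any port of a client named "fluidsynth" (assumed name)
# is connected to a system:playback port.
fluidsynth_connected() {
    awk '
        /^[^ \t]/ { port = $1; next }   # unindented line: a port name
        port ~ /^fluidsynth:/ && $1 ~ /^system:playback_/ { found = 1 }
        END { exit found ? 0 : 1 }
    '
}
```

While FluidSynth is playing, something like jack_lsp -c | fluidsynth_connected && echo connected should then report whether the -j option did its job.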

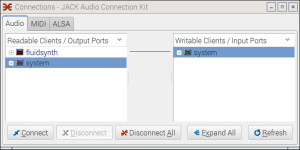

The image below shows the audio connection from FluidSynth to JACK. This is a snapshot of the qjackctl Connections window while FluidSynth is playing a MIDI file. The audio connection is broken when FluidSynth is done with playback (i.e., when FluidSynth exits).

FluidSynth provides a way to fast render a MIDI file to a digital audio file. “Fast” is a relative term. Perhaps “non-realtime render” is a more accurate description. The following command:

fluidsynth -T wav -F EvilWays.wav /usr/share/sounds/sf2/FluidR3_GM.sf2 EvilWays.mid

converts a MIDI file (“EvilWays.mid”) to a WAV format audio file (“EvilWays.wav”). The -T option specifies the audio file type and the -F option specifies the name of the output file. The rendering process grinds on for a little while, so please be patient. Once you have the audio file, play it back using the ALSA aplay program:

aplay -D hw:CODEC,0 EvilWays.wav

This example command sends digital audio to the CODEC audio device. Of course, you may use the built-in audio port or some other device. (See part 2 of this series for more examples. These tutorial articles build on each other!)
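Fast rendering is a natural candidate for a batch job. Here is a minimal sketch that renders every .mid file in a directory to a WAV file of the same name. The function names wav_name and render_all are my own inventions for this example; the fluidsynth options are the ones from the render command above.

```shell
# wav_name: derive the output file name by swapping a .mid suffix for .wav.
wav_name() {
    echo "${1%.mid}.wav"
}

# render_all: fast-render every .mid file in a directory (default: the
# current directory) using the General MIDI SoundFont.
render_all() {
    dir="${1:-.}"
    for midi in "$dir"/*.mid; do
        [ -e "$midi" ] || continue   # no matches: the glob stays literal
        fluidsynth -T wav -F "$(wav_name "$midi")" \
            /usr/share/sounds/sf2/FluidR3_GM.sf2 "$midi"
    done
}
```

Each file is rendered in turn, so expect a large folder of MIDI files to keep the Raspberry Pi busy for a while.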

The way to get a list of audio types (-T) and audio file formats (-O) is confusing. You need to pass “help” to the appropriate command line option. (Grrrrrr.) The command:

fluidsynth -O help

produces the following output on Raspbian JESSIE:

-O options (audio file format):

'double','float','s16','s24','s32','s8','u8'

s8, s16, s24, s32: Signed PCM audio of the given number of bits

float, double: 32 bit and 64 bit floating point audio

The command:

fluidsynth -T help

produces the output:

-T options (audio file type):

'aiff','au','auto','avr','caf','flac','htk','iff','mat','mpc','oga', 'paf','pvf','raw','rf64','sd2','sds','sf','voc','w64','wav','wve','xi'

auto: Determine type from file name extension, defaults to "wav"

Finally, server mode is needed when you want to run FluidSynth as a stand-alone server process. Qsynth is more convenient, so I won’t discuss server mode here just to keep things short.

I have to warn you: working with FluidSynth in either interactive mode or one-liner mode is not always smooth. Feedback is limited and you often have to work through rather cryptic error messages. Qsynth makes life much easier and more interesting.

Qsynth

Qsynth is a graphical user interface (GUI) for FluidSynth. Qsynth is based on the Qt framework and toolset for user interface design and implementation.

Qsynth is the way to go if you want to use FluidSynth as a soft synth with a MIDI controller or sequencer. It pairs up rather nicely with QJackCtl, too.

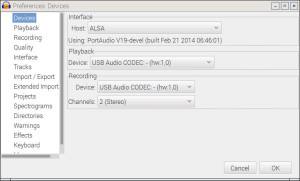

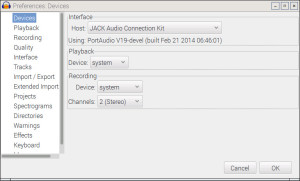

I’ll demonstrate Qsynth using an M-Audio Keystation Mini 32 controller. If you’re working along with me, plug a MIDI keyboard controller into an available Raspberry Pi 2 USB port. Launch qjackctl:

qjackctl &

and start the JACK server by clicking the Start button in the QJackCtl control panel. JACK routes the audio to the selected audio output port. Then, launch qsynth:

qsynth

Qsynth automatically searches for the JACK server and connects audio to it. Qsynth displays a control panel which resembles an old school MIDI module. The panel knobs control master gain and the reverb and chorus effects. There are also buttons to Restart FluidSynth, to stop stuck notes (Panic), to Reset settings and to view/edit MIDI channel settings (Channels).

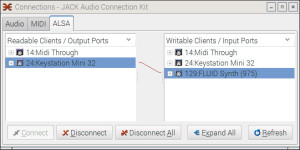

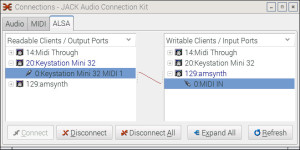

At this point, you need a MIDI connection from the Keystation (or other MIDI controller) to Qsynth. In the demo, I clicked the Connect button on the QJackCtl panel and made the MIDI connection using the Connections window. (See the image below.)

Select the Keystation entry on the left and select the FluidSynth entry on the right. Click the Connect button to make the MIDI connection. “FluidSynth” appears as a destination in the right hand column instead of “Qsynth.” Remember, Qsynth is a graphical front-end for a FluidSynth instance running in the background. The MIDI controller needs to communicate with the soft synth itself.
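The same MIDI connection can usually be made from a terminal with the ALSA aconnect utility, provided that Qsynth is using the ALSA sequencer for MIDI input (an assumption; check the MIDI tab in Setup). The sketch below pulls a client number out of an aconnect listing by matching part of the client name. The function name alsa_client_id is hypothetical, and the client names "Keystation" and "FLUID Synth" are what I would expect to see, not a guarantee; run aconnect -i and aconnect -o to see the real names on your system.

```shell
# alsa_client_id: read an `aconnect -i` or `aconnect -o` listing on standard
# input and print the number of the first client whose line contains the
# given text. Listing lines are assumed to look like:
#   client 20: 'Keystation Mini 32' [type=kernel]
alsa_client_id() {
    awk -v pat="$1" '
        $1 == "client" && index($0, pat) { sub(":", "", $2); print $2; exit }
    '
}

# Typical use (port 0 on each client is an assumption):
#   src=$(aconnect -i | alsa_client_id "Keystation")
#   dst=$(aconnect -o | alsa_client_id "FLUID Synth")
#   aconnect "$src:0" "$dst:0"
```

If either lookup prints nothing, the name did not match, and the Connections window in QJackCtl remains the more forgiving way to make the connection.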

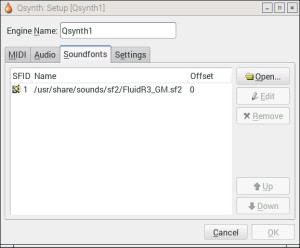

Play a few notes on the MIDI controller to make sure that audio and MIDI are working. Then, click the Setup button on the Qsynth front panel. Qsynth displays its Setup window which has four tabs: MIDI, Audio, Soundfonts and Settings. Click Soundfonts to go to that tab.

The Soundfonts tab displays the SoundFont files that are currently loaded into Qsynth (FluidSynth). Click on the Open button to load a SoundFont file like:

/usr/share/sounds/sf2/FluidR3_GS.sf2

Use the Remove button to unload a SoundFont. Click the OK button when you are finished making changes.

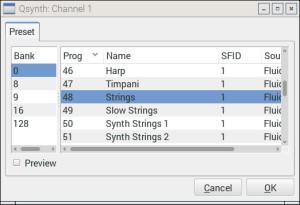

If you start Qsynth with the General MIDI SoundFont and play notes on MIDI channel 1, you hear a grand piano voice. Click the Channels button on the front panel in order to change voices. With the Channels window open, double click on a row in the MIDI channel table. Should you prefer contextual menus instead, right click on a row and select Edit in the pop-up menu. This action gets you to the same place: the channel edit window (below).

The channel edit window displays a list of available SoundFont voices. Voices are organized and selected in the conventional way, namely, banks and individual programs (voices). Choose a different voice like Strings (General MIDI bank 0, program 48). Qsynth does not change the voice until you click the OK button to confirm the change. If you would like to browse and try voices, check the Preview box. When Preview is enabled, Qsynth temporarily changes the voice, letting you plink away on the controller and hear the voice before changing it (or perhaps just leaving things alone by cancelling).

Click the Quit button on the Qsynth front panel when you’re finished. Then, stop the JACK server using the QJackCtl control panel.

That’s all there is to it!

Copyright © 2016 Paul J. Drongowski