Back on the old day job, I developed and tested software and hardware for program profiling. Testing may sound like drudge-work, but there are ways to make things fun!

Two questions arise while testing a profiling infrastructure — software plus hardware:

- Does the hardware accurately count (or sample) performance events for a given specific workload?

- Does the software accurately display the counts or samples?

Clearly, ya need working hardware before you can build working software.

Testing requires a solid, known-good (KG) baseline in order to decide if new test results are correct. Here’s one way to get a KG baseline — a combination of static analysis and measurement:

- Static analysis: Analyze the post-compilation machine code and predict the expected number of instruction retires, cache reads, misses, etc.

- Measurement: Run the code and count performance events.

- Validation: Compare the measured results against the predicted results.

Thereafter, one can compare new measurements taken from the system under test (SUT) and compare against both predicted results and baseline measured results.

Applying this method to performance counter counting mode is straightforward. You might get a little “hair” in the counts due to run-to-run variability, however, the results should be well-within a small measurement error. Performance counter sampling mode is more difficult to assess and one must be sure to collect a statistically significant number of samples within critical workload code in order to have confidence in a result.

One way to make testing fun is to make it a game. I wrotekernel programs that exercised specific hardware events and analyzed the inner test loops. You could call these programs “test kernels.” The kernels are pathologically bad (or good!) code which triggers a large number of specific performance events. It’s kind of a game to write such bad code…

The expected number of performance events is predicted through machine code level complexity analysis known as program “microanalysis.” For example, the inner loops of matrix multiplication are examined and, knowing the matrix sizes, the number of retired instructions, cache reads, branches, etc. are computed in closed form, e.g.,

(38 inner loop instructions) * (1,000,000,000 iterations) +

(26 middle loop instructions) * (1,000,000 iterations) +

(9 outer loop instructions) * (1,000 iterations)

-----------------------------------------------------------

38,026,009,000 retired instructions expected

38,227,831,497 retired instructions measured

This formula is the closed form expression for the retired instruction count within the textbook matrix multiplication kernel. The microanalysis approach worked successfully on Alpha, Itanium, x86, x64 and (now) ARM. [That’s a short list of machines that I’ve worked on. 🙂 ]

With that background in mind, let’s write a program kernel to deliberately cause branch mispredictions and measure branch mispredict events.

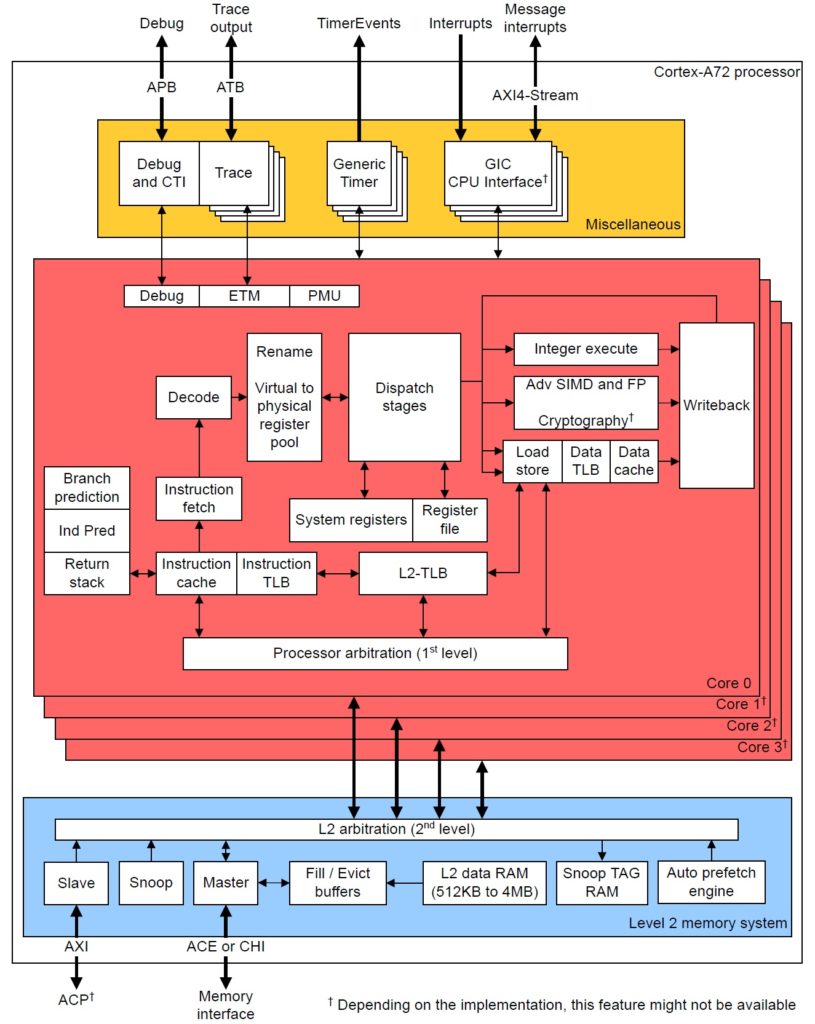

The ARM Cortex-A72 core predicts conditional branch direction in order to aggressively prefetch and dispatch instructions along an anticipated program path before the actual branch direction is known. A branch mispredict event occurs when the core detects a mistaken prediction. Micro-ops on the wrong path must be discarded and the front-end must be steered down the correct program path. The Cortex-A72 mispredict penalty is 15 cycles.

What we need is a program condition that consistently fools the Cortex-A72 branch prediction hardware. Branch predictors try to remember a program’s tendency to take or not take a branch and the predictors are fairly sensitive; even a 49%/51% split between taken and not taken has a beneficial effect on performance. So, we need a program condition which has 50%/50% split with a random pattern of taken and not taken direction.

Here’s the overall approach. We fill a large array with a random pattern of ‘0’ and ‘1’ characters. Then, we walk through the array and count the number of ‘1’ characters. The function initialize_test_array() fills the array with a (pseudo-)random pattern of ones and zeroes:

void initialize_test_array(int size, char* array,

int always_one, int always_zero)

{

register char* r = array ;

int s ;

for (s = size ; s > 0 ; s--) {

if (always_one) {

*r++ = '1' ;

} else if (always_zero) {

*r++ = '0' ;

} else {

*r++ = ((rand() & 0x1) ? '1' : '0') ;

}

}

}

The function has options to fill the array with all ones or all zeroes in case you want to see what happens when the inner conditional branch is well-predicted. BTW, I made the array 20,000,000 characters long. The size is not especially important other than the desire to have a modestly long run time.

The function below, test_loop(), contains the inner condition itself:

int test_loop(int size, char* array)

{

register int count = 0 ;

register char* r = array ;

int s ;

for (s = size ; s > 0 ; s--) {

if (*r++ == '1') count++ ; // Should mispredict!

} return( count ) ;

}

The C compiler translates the test for ‘1’ to a conditional branch instruction. Given an array with random ‘0’ and ‘1’ characters, we should be able to fool the hardware branch predictor. Please note that the compiler generates a conditional branch for the array/loop termination condition, s > 0. This conditional branch should be almost always predicted correctly.

The function run_the_test() runs the test loop:

void run_the_test(int iteration_count, int array_size, char* array)

{

register int rarray_size = array_size ;

register char* rarray = array ;

int i ;

for (i = iteration_count ; i-- ; ) {

test_loop(array_size, array) ;

}

}

It calls test_loop() many times as determined by iteration_count. Redundant iterations aren’t strictly necessary when taking measurements in counting mode. They are needed, however, in sampling mode in order to collect a statistically significant number of performance event samples. I set the iteration count to 200 — enough to get a reasonable run time when sampling.

The test driver code initializes the branch condition array, configures the ARM Cortex-A72 performance counters, starts the counters, runs the test loop, stops the counters and prints the performance event counts:

initialize_test_array(array_size, array, always_one, always_zero) ;

a72MeasureInstructionEvents() ;

peStartCounting() ;

run_the_test(iteration_count, array_size, array) ;

peStopCounting() ;

a72PrintInstructionEvents(stdout) ;

The four counter configuration, control and display functions are part of a small utility module that I wrote. I will explain the utility module in a future post and will publish the code, too.

Finally, here are the measurements when scanning an array holding a random pattern of ‘0’ and ‘1’ characters:

Instructions ret'd: 45,999,735,845

Instructions spec'd: 98,395,483,123

CPU cycles: 59,010,851,259

Branch speculated : 8,012,669,711

Branch mispredicted: 2,001,934,251

Branch predicted 8,012,669,710

Instructions per cycle: 0.780

Retired/spec'd ratio: 0.467

Branches per 1000 (PTI): 174.189

Branch mispredict ratio: 0.250

Please recall that there are two conditional branches in the inner test loop: a conditional branch to detect ‘1’ characters and a conditional branch to check the array/loop termination condition. The loop check should be predicted correctly almost all the time, accounting for 50% of the total number of correctly predicted branches. The character test, however, should be incorrectly predicted 50% of the time. It’s like guessing coin flips — you’ll be right half the time on average. Overall, 25% of branch predictions should be incorrect, and yes, the measured branch mispredict ratio is 0.250 or 25%.

The number of speculated instructions is also very interesting. Cortex-A72 speculated twice as many ARMv8 instructions as it retired. Over half of the speculated instructions did not complete architecturally and were discarded. That’s what happens when a conditional branch is grossly mispredicted!

I hope you enjoyed this simple experiment. It makes the Cortex-A72 fetch and branch prediction behavior come alive. As a follow-up experiment, I suggest trying all-ones or all-zeroes.

Please check out other articles in this series:

- ARM Cortex-A72 tuning: IPC

- Cortex-A72 tuning: Data access

- ARM Cortex-A72 fetch and branch processing

- ARM Cortex-A72 execution and load/store operations

- Raspberry Pi 4 Performance Events

Don’t forget my Performance Events for Linux tutorial and learn to make your own Raspberry Pi 4 (Broadcom BCM2711) performance measurements.

Copyright © 2021 Paul J. Drongowski

ARM Cortex-A72 branch-related performance events:

Number Mnemonic Event name

------ ---------------- -----------------------------------------

0x08 INST_RETIRED Instruction architecturally executed

0x10 BR_MIS_PRED Mispredicted or not predicted branches

0x11 CPU_CYCLES Processor cycles

0x12 BR_PRED Predictable branch speculatively executed

0x1B INST_SPEC Operation speculatively executed

0x76 PC_WRITE_SPEC Software change of the PC (speculative)

0x78 BR_IMMED_SPEC Immediate branch (speculative)

0x79 BR_RETURN_SPEC Procedure return (speculative)

0x7A BR_INDIRECT_SPEC Indirect branch (speculative)

Disassembled code for test_loop():

00010678 :

10678: e92d0830 push {r4, r5, fp}

1067c: e28db008 add fp, sp, #8

10680: e24dd014 sub sp, sp, #20

10684: e50b0018 str r0, [fp, #-24] ; 0xffffffe8

10688: e50b101c str r1, [fp, #-28] ; 0xffffffe4

1068c: e3a04000 mov r4, #0

10690: e51b501c ldr r5, [fp, #-28] ; 0xffffffe4

10694: e51b3018 ldr r3, [fp, #-24] ; 0xffffffe8

10698: e50b3010 str r3, [fp, #-16]

1069c: ea000008 b 106c4

106a0: e1a03005 mov r3, r5

106a4: e2835001 add r5, r3, #1

106a8: e5d33000 ldrb r3, [r3]

106ac: e3530031 cmp r3, #49 ; 0x31

106b0: 1a000000 bne 106b8 ; Should mispredict!

106b4: e2844001 add r4, r4, #1

106b8: e51b3010 ldr r3, [fp, #-16]

106bc: e2433001 sub r3, r3, #1

106c0: e50b3010 str r3, [fp, #-16]

106c4: e51b3010 ldr r3, [fp, #-16]

106c8: e3530000 cmp r3, #0

106cc: cafffff3 bgt 106a0 ; Correctly predicted

106d0: e1a03004 mov r3, r4

106d4: e1a00003 mov r0, r3

106d8: e24bd008 sub sp, fp, #8

106dc: e8bd0830 pop {r4, r5, fp}

106e0: e12fff1e bx lr