In my discussion about instructions per cycle as a performance metric, I compared the textbook implementation of matrix multiplication against the loop nest interchange version. The textbook program ran slower (28.6 seconds) than the interchange version (19.6 seconds). The interchange program executes 2.053 instructions per cycle (IPC) while the textbook version has a less than stunning 0.909 IPC.

Let’s see why this is the case.

Like many other array-oriented scientific computations, matrix multiplication is limited by memory bandwidth. It has two incoming data streams, one from each of the two operand matrices, and one outgoing data stream for the matrix product. Because every product element depends on operand values that must arrive first, the incoming streams matter more than the outgoing stream. Thus, anything that we can do to speed up the flow of the incoming data streams will improve program performance.

Matrix multiplication is one of the most studied examples due to its simplicity, wide applicability and familiar mathematics. So, nothing in this note should be much of a surprise! Let’s pretend, for a moment, that we don’t know the final outcome of our analysis.

I measured retired instructions, CPU cycles and level 1 data (L1D) cache and level 2 (L2) cache read events:

| Event | Textbook | Interchange |
|:-----------------------|---------------:|---------------:|
| Retired instructions | 38,227,831,497 | 60,210,830,509 |
| CPU cycles | 42,068,324,320 | 29,279,037,884 |
| Instructions per cycle | 0.909 | 2.056 |
| L1 D-cache reads | 15,070,922,957 | 19,094,920,483 |
| L1 D-cache misses | 1,096,278,643 | 9,576,935 |
| L2 cache reads | 1,896,007,792 | 264,923,412 |
| L2 cache read misses | 124,888,097 | 125,524,763 |

There is one big take-away here: the textbook program misses in the data cache far more often than interchange. The textbook L1D cache miss ratio is 0.073 (7.3%) while the interchange miss ratio is about 0.0005 (0.05%). As a consequence, the textbook program reads the slower level 2 (L2) cache about seven times as often (1.90 billion reads versus 0.26 billion) to find necessary data.

If you noticed slightly different counts for the same event, good eye! The counts are from different runs. It’s normal to have small variations from run to run due to measurement error, unintended interference from system interrupts, etc. Results are largely consistent across runs.

The behavioral differences come down to the memory access pattern in each program. In C, two-dimensional arrays are arranged in row-major order. The textbook program touches one operand matrix in row-major order and touches the other operand matrix in column-major order. The interchange program touches both operand arrays in row-major order. Thanks to row-major order’s sequential memory access, the interchange program finds its data in level 1 data (L1D) cache more often than the textbook implementation.
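To make the two access patterns concrete, here is a minimal sketch of both loop nests. It is not the exact code that was measured: the function names, the `float` element type and the flat `n * n` array layout (which mimics C's row-major order) are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Textbook (i, j, k) order. The inner loop reads a along a row but
 * reads b down a column, so consecutive reads of b are n elements apart. */
void multiply_textbook(size_t n, const float *a, const float *b, float *c)
{
    assert(n > 0);
    for (size_t i = 0; i < n; i++) {
        for (size_t j = 0; j < n; j++) {
            float sum = 0.0f;
            for (size_t k = 0; k < n; k++) {
                sum += a[i * n + k] * b[k * n + j];
            }
            c[i * n + j] = sum;
        }
    }
}

/* Interchange (i, k, j) order. The inner loop reads b and updates c
 * along a row, so every inner-loop access is sequential in memory. */
void multiply_interchange(size_t n, const float *a, const float *b, float *c)
{
    assert(n > 0);
    /* The product is accumulated in place, so clear it first. */
    for (size_t i = 0; i < n * n; i++) {
        c[i] = 0.0f;
    }
    for (size_t i = 0; i < n; i++) {
        for (size_t k = 0; k < n; k++) {
            float r = a[i * n + k];
            for (size_t j = 0; j < n; j++) {
                c[i * n + j] += r * b[k * n + j];
            }
        }
    }
}
```

In this sketch the interchange inner loop loads and stores an element of `c` on every iteration, where the textbook inner loop accumulates in a local variable. That would be consistent with the interchange program retiring more instructions while still finishing sooner, although the compiler's code generation also plays a part.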

There is another micro-architecture aspect to this situation, too. Here are the performance event counts for translation look-aside buffer (TLB) behavior:

| Event | Textbook | Interchange |
|:-----------------------|---------------:|---------------:|
| Retired instructions | 38,227,830,517 | 60,210,830,503 |
| L1 D-cache reads | 15,070,845,178 | 19,094,937,273 |
| L1 DTLB miss | 1,001,149,440 | 17,556 |
| L1 DTLB miss LD | 1,000,143,621 | 10,854 |
| L1 DTLB miss ST | 1,005,819 | 6,702 |

Because it reads one of the operand matrices in column-major order, the textbook program makes long strides through that matrix, and the stride length depends on the chosen matrix dimensions. Here the stride is big enough that successive accesses touch different memory pages, thereby causing level 1 data TLB (DTLB) misses. The textbook program has a 0.066 (6.6%) DTLB miss ratio. The miss ratio is near zero for the interchange version.
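The effect of stride on page behavior can be sketched with a little arithmetic. The helper below is hypothetical, and so are the numbers used with it: the matrix dimension (1000), the element size (4 bytes) and the page size (4096 bytes) are assumptions for illustration, not values taken from the measured programs. It counts how often consecutive accesses land on a different page.

```c
#include <assert.h>
#include <stddef.h>

/* Walk `count` elements spaced `stride_bytes` apart, starting at
 * offset 0, and count how many times an access falls on a different
 * page than the access before it. */
size_t page_changes(size_t count, size_t stride_bytes, size_t page_bytes)
{
    assert(page_bytes > 0);
    size_t changes = 0;
    size_t prev_page = 0; /* the first access is on page 0 */
    for (size_t i = 1; i < count; i++) {
        size_t page = (i * stride_bytes) / page_bytes;
        if (page != prev_page) {
            changes++;
        }
        prev_page = page;
    }
    return changes;
}
```

Under those assumptions, `page_changes(1000, 4, 4096)` models a walk along a row and stays on one page, while `page_changes(1000, 4000, 4096)` models a walk down a column and moves to a new page on nearly every access. A page change is not the same thing as a DTLB miss, since the TLB holds several entries, but it shows how quickly a column walk sweeps across pages.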

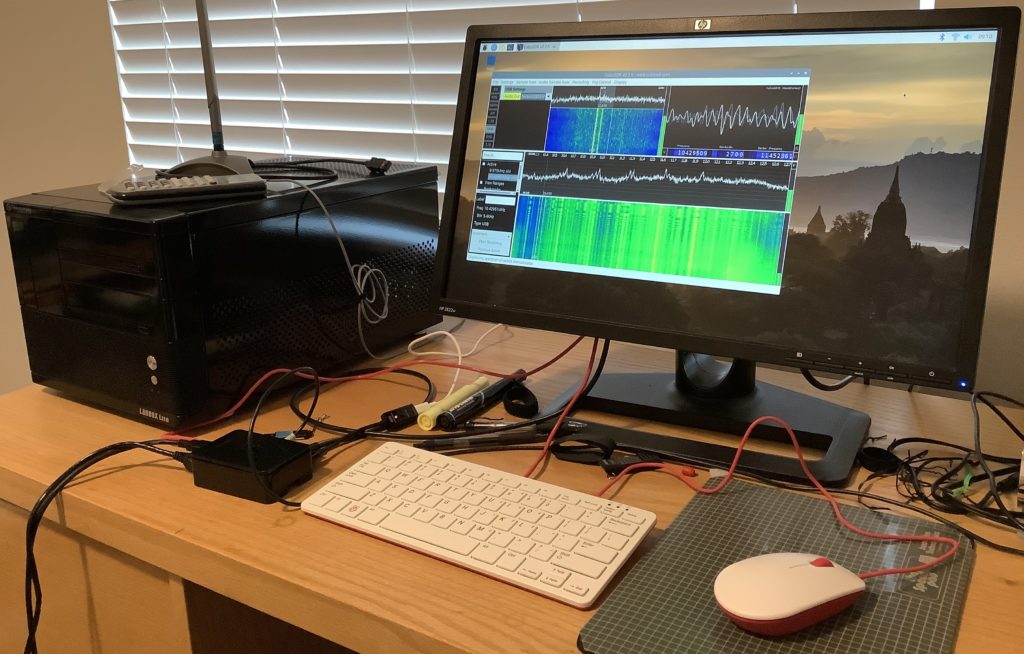

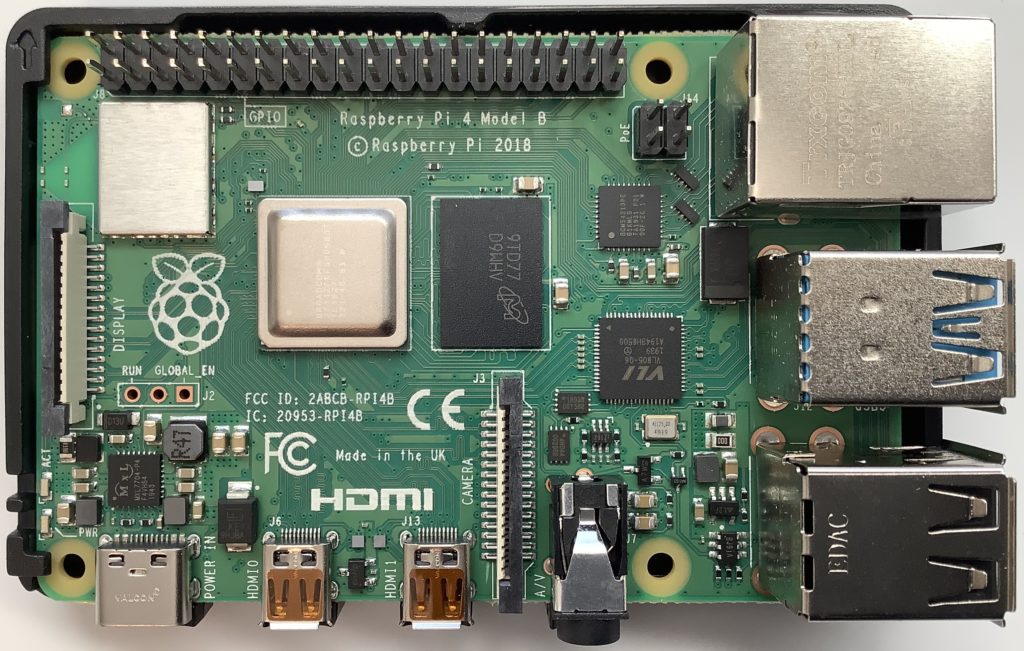

I hope this discussion motivates the importance of cache- and TLB-friendly algorithms and code. Please see the following articles if you need to brush up on ARM Cortex-A72 micro-architecture and performance events:

- ARM Cortex-A72 fetch and branch processing

- ARM Cortex-A72 execution and load/store operations

- Raspberry Pi 4 Performance Events

Check out my Performance Events for Linux tutorial and learn to make your own Raspberry Pi 4 (Broadcom BCM2711) performance measurements.

Here is a list of the ARM Cortex-A72 performance events that are most useful for measuring memory access (load, store and fetch) behavior. Please see the ARM Cortex-A72 MPCore Processor Technical Reference Manual (TRM) for the complete list of performance events.

| Number | Mnemonic | Name |
|:-------|:--------------------|:---------------------------------------|
| 0x01 | L1I_CACHE_REFILL | Level 1 instruction cache refill |
| 0x02 | L1I_TLB_REFILL | Level 1 instruction TLB refill |
| 0x03 | L1D_CACHE_REFILL | Level 1 data cache refill |
| 0x04 | L1D_CACHE | Level 1 data cache access |
| 0x05 | L1D_TLB_REFILL | Level 1 data TLB refill |
| 0x08 | INST_RETIRED | Instruction architecturally executed |
| 0x11 | CPU_CYCLES | Processor cycles |
| 0x13 | MEM_ACCESS | Data memory access |
| 0x14 | L1I_CACHE | Level 1 instruction cache access |
| 0x15 | L1D_CACHE_WB | Level 1 data cache Write-Back |
| 0x16 | L2D_CACHE | Level 2 data cache access |
| 0x17 | L2D_CACHE_REFILL | Level 2 data cache refill |
| 0x18 | L2D_CACHE_WB | Level 2 data cache Write-Back |
| 0x19 | BUS_ACCESS | Bus access |
| 0x40 | L1D_CACHE_LD | Level 1 data cache access - Read |
| 0x41 | L1D_CACHE_ST | Level 1 data cache access - Write |
| 0x42 | L1D_CACHE_REFILL_LD | L1D cache refill - Read |
| 0x43 | L1D_CACHE_REFILL_ST | L1D cache refill - Write |
| 0x46 | L1D_CACHE_WB_VICTIM | L1D cache Write-back - Victim |
| 0x47 | L1D_CACHE_WB_CLEAN | L1D cache Write-back - Cleaning |
| 0x48 | L1D_CACHE_INVAL | L1D cache invalidate |
| 0x4C | L1D_TLB_REFILL_LD | L1D TLB refill - Read |
| 0x4D | L1D_TLB_REFILL_ST | L1D TLB refill - Write |
| 0x50 | L2D_CACHE_LD | Level 2 data cache access - Read |
| 0x51 | L2D_CACHE_ST | Level 2 data cache access - Write |
| 0x52 | L2D_CACHE_REFILL_LD | L2 data cache refill - Read |
| 0x53 | L2D_CACHE_REFILL_ST | L2 data cache refill - Write |
| 0x56 | L2D_CACHE_WB_VICTIM | L2 data cache Write-back - Victim |
| 0x57 | L2D_CACHE_WB_CLEAN | L2 data cache Write-back - Cleaning |
| 0x58 | L2D_CACHE_INVAL | L2 data cache invalidate |
| 0x66 | MEM_ACCESS_LD | Data memory access - Read |
| 0x67 | MEM_ACCESS_ST | Data memory access - Write |
| 0x68 | UNALIGNED_LD_SPEC | Unaligned access - Read |
| 0x69 | UNALIGNED_ST_SPEC | Unaligned access - Write |
| 0x6A | UNALIGNED_LDST_SPEC | Unaligned access |
| 0x70 | LD_SPEC | Speculatively executed - Load |
| 0x71 | ST_SPEC | Speculatively executed - Store |
| 0x72 | LDST_SPEC | Speculatively executed - Load or store |
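The event numbers in the table can be handed to the Linux kernel as raw events. As a minimal sketch, and not necessarily how the counts in this note were collected, the hypothetical helper below fills in a `perf_event_attr` structure for one raw event number. The helper name and the choice to exclude kernel and hypervisor activity are assumptions.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <linux/perf_event.h>

/* Prepare a request for one raw PMU event, identified by its event
 * number from the table above (for example 0x03, L1D_CACHE_REFILL). */
void init_raw_event_attr(struct perf_event_attr *attr, uint64_t event_number)
{
    assert(attr != NULL);
    memset(attr, 0, sizeof(*attr));
    attr->type = PERF_TYPE_RAW;   /* config holds a hardware event number */
    attr->size = sizeof(*attr);
    attr->config = event_number;
    attr->disabled = 1;           /* start stopped; enable explicitly later */
    attr->exclude_kernel = 1;     /* assumption: count user-space work only */
    attr->exclude_hv = 1;
}
```

A program would then pass the structure to the `perf_event_open` system call, enable the counter around the code of interest with `ioctl` (`PERF_EVENT_IOC_RESET`, `PERF_EVENT_IOC_ENABLE`, `PERF_EVENT_IOC_DISABLE`), and `read` the 64-bit count from the returned file descriptor. Whether a raw event actually opens depends on the kernel configuration and permissions on the target system.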

Copyright © 2021 Paul J. Drongowski