Digging around in the kernel and PERF source code, I got lost in the labyrinth of ARM products. So, I took a little time to learn about ARM’s naming conventions.

ARM have always been good at separating architecture from implementation (core technology). Architecture is what the programmer sees, or rather, the behavioral standard, which includes the “instruction set architecture” or “ISA.” Architecture goes beyond instruction sets and includes operating system concerns such as virtual memory, interrupts, etc.

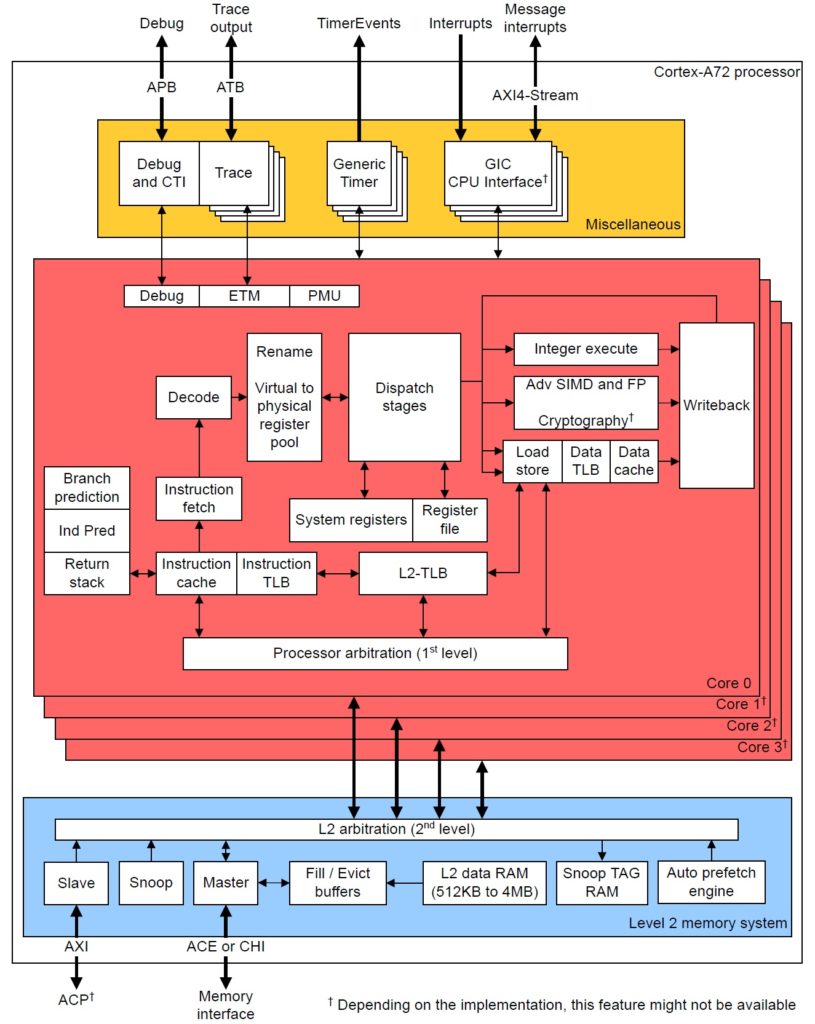

Core technology implements architectural features. Core technology is the underlying machine organization, AKA the “micro-architecture.” Depending upon the actual design, implementation touches on pipeline length and stages, caches, translation look-aside buffers (TLBs), branch predictors and all of the other circuit stuff needed for efficient, performant execution.

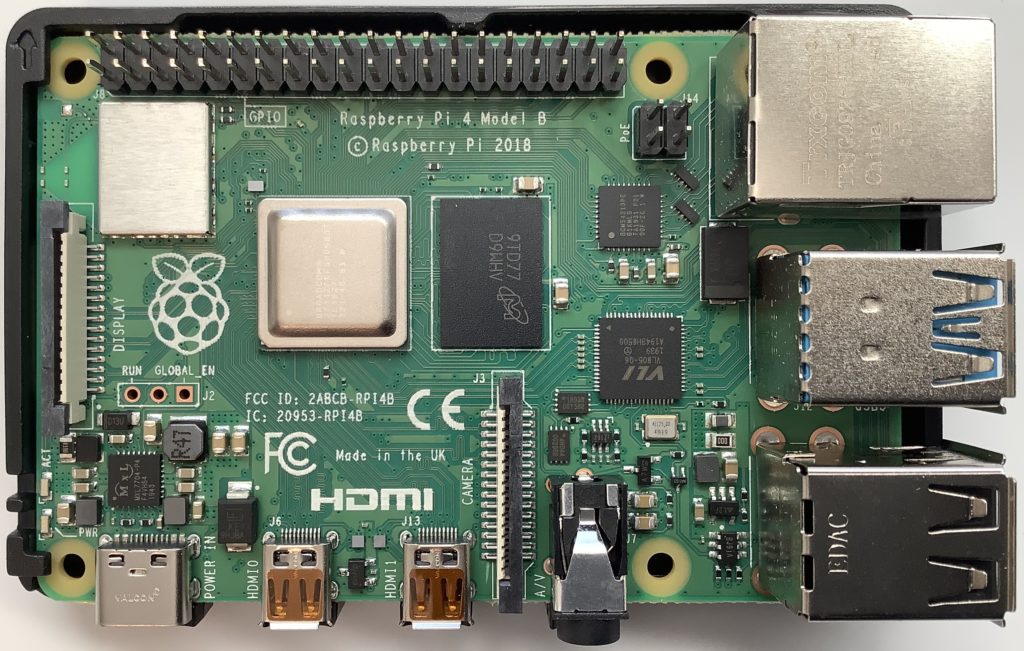

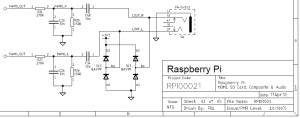

ARM architecture names have the form “ARMvX”, where X is the version number. The original Raspberry Pi (Broadcom BCM2835 with ARM1176JZF-S processor) implemented the ARMv6 architecture. The current Raspberry Pi 4 (Broadcom BCM2711 with quad Cortex-A72 cores) implements the ARMv8-A architecture. ARMv8 is a multi-faceted beast and a short summary is wholly inadequate to convey its full scope. I suggest reading through the ARMv8 architectural profile on the ARM Web site. Suffice it to say here, ARMv8.2 is the latest.

ARMv8 was and is a big deal. ARMv7 was 32-bit only. ARMv8 took the architecture into 64-bit operation by adding a 64-bit ISA and operating system features, and it separates operation into the AArch32 execution state and the (then) new AArch64 execution state. The AArch32 state retains ARMv7 32-bit functionality, preserving backward compatibility with ARMv7.

You probably noticed that the core name “ARM1176JZF-S” (above) is not especially informative, nor does it suggest this core’s place in the constellation of ARM products. In 2005, ARM introduced a new core technology naming scheme. The naming scheme categorizes cores by series:

- Cortex-A: Application

- Cortex-R: Realtime

- Cortex-M: Embedded

The series letters spell out “ARM” — clever. Cores within a series are tailored for their intended deployment environment having the appropriate mix of performance (speed), real estate (space) and power envelope.

The following table is a partial, historical roadmap of recent ARM cores:

| 32-bit cores | 64-bit cores (ARMv8) | Year | Pipeline | big.LITTLE role |
|--------------|----------------------|------|--------------|-----------------|
| Cortex-A5    | Cortex-A53           | 2012 | In-order     | LITTLE          |
| Cortex-A7    | Cortex-A57           | 2012 | Out-of-order | big             |
| Cortex-A8    | Cortex-A72           | 2015 | Out-of-order | big             |
| Cortex-A9    | Cortex-A73           | 2016 | Out-of-order | big             |
| Cortex-A12   | Cortex-A55           | 2017 | In-order     | LITTLE          |
| Cortex-A15   | Cortex-A76           | 2018 | Out-of-order | big             |
| Cortex-A17   | Cortex-A77           | 2019 | Out-of-order | big             |

The 64-bit cores in the second column are a significant architectural break from the 32-bit cores in the first column. I will focus on the ARMv8 (64-bit) cores.

ARM rolled out the big.LITTLE multiprocessor configuration at roughly the same time as ARMv8. With big.LITTLE, ARM vendors can design multiprocessors that are a mixture of big cores and LITTLE cores. LITTLE cores are power-efficient implementations much like ARM’s previous core designs for embedded and mobile systems, which consume and dissipate as little power as possible. Big cores trade higher power for higher performance. [If you really want to make digital electronics go fast, you must expend energy.] Thus, a cell phone can spend most of its time on low-power cores, saving battery, while hitting the high-power cores when the user needs compute performance.

The big.LITTLE approach is enabled by common cache and coherent communication bus design. The little guys and the big guys communicate through a common infrastructure. Nice.

ARMv8 LITTLE cores are in-order superscalar designs. In-order designs are simpler than out-of-order superscalar. In-order cores usually have a shorter pipeline, have fewer execution units, and do not require a big register file for renaming and delayed retirement. Out-of-order superscalar designs pull out all of the stops for performance and exploit as much instruction level parallelism (ILP) as they can discover.

The Cortex-A53 was the first ARMv8 in-order LITTLE core. ARM introduced its successor, Cortex-A55, in 2017.

The big core era began with Cortex-A57. This was ARM’s first design that rivaled Intel and AMD out-of-order x86 cores. The Cortex-A72 replaced the A57. Thus, the Raspberry Pi 4 (BCM2711) uses an older ARM big core, the Cortex-A72. [Explains my enthusiasm for a $70 o-o-o superscalar.] ARM have churned out new big cores on an annual basis ever since from their Austin and Sophia design centers.

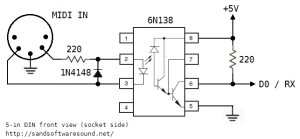

Before pushing ahead, it’s worth mentioning that the Yamaha Montage synthesizer has an 800MHz Texas Instruments Sitara ARM Cortex-A8 single core processor and a 40MHz Fujitsu MB9AF141NA with an ARM Cortex-M3 core. The four 64-bit A72 cores in the Raspberry Pi 4 have much more compute throughput than the single 32-bit A8 core in the Yamaha Montage. The Montage (MODX) processor provides user interface and control and is not really a compute engine. The SWP70 silicon is the tone generator.

In practice

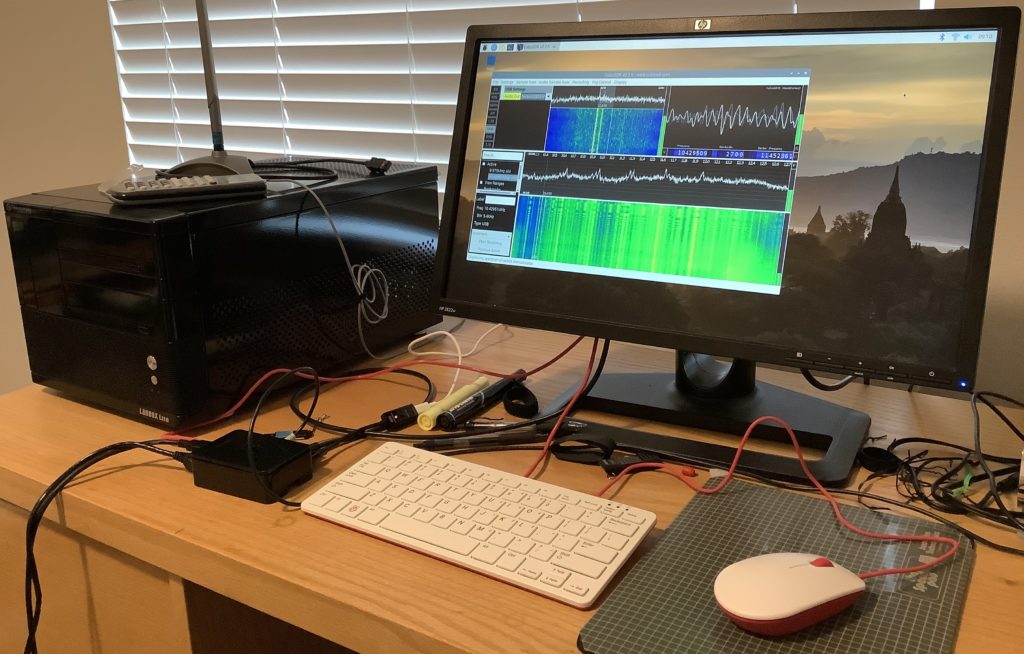

I began this dive into naming and ARM cores when I needed to determine the actual ARM core support installed with Performance Events for Linux (PERF) and the underlying kernel.
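One quick way to see which core the kernel thinks it is running on is the “CPU implementer” and “CPU part” fields that ARM Linux prints in /proc/cpuinfo. The sketch below is my own illustration, not something taken from PERF or the kernel: the function name `cores_from_cpuinfo` is hypothetical, and the part-number table covers only a handful of cores mentioned in this article, with values that I believe match ARM’s technical reference manuals but that you should verify before relying on them.

```python
# Primary part numbers (from the MIDR register) for a few ARM-designed cores.
# Illustrative and incomplete; check the core's reference manual before trusting it.
ARM_PARTS = {
    0xB76: "ARM1176",
    0xC08: "Cortex-A8",
    0xC0F: "Cortex-A15",
    0xD03: "Cortex-A53",
    0xD05: "Cortex-A55",
    0xD07: "Cortex-A57",
    0xD08: "Cortex-A72",
    0xD09: "Cortex-A73",
    0xD0B: "Cortex-A76",
    0xD0D: "Cortex-A77",
}

ARM_IMPLEMENTER = 0x41  # ASCII 'A', the implementer code for ARM Ltd.


def cores_from_cpuinfo(text):
    """Return one core name per 'CPU part' line found in cpuinfo-style text."""
    cores = []
    implementer = None
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank line or a line without a "key : value" shape
        key = key.strip()
        value = value.strip()
        if key == "CPU implementer":
            implementer = int(value, 16)
        elif key == "CPU part":
            part = int(value, 16)
            if implementer == ARM_IMPLEMENTER and part in ARM_PARTS:
                cores.append(ARM_PARTS[part])
            else:
                cores.append("unknown (part 0x%03x)" % part)
    return cores
```

To try it, pass in the contents of /proc/cpuinfo, e.g. `cores_from_cpuinfo(open("/proc/cpuinfo").read())`. On a non-ARM machine the list simply comes back empty because those fields are absent.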

The kernel creates system files which let a program query the characteristics of the hardware platform. You might be familiar with the /proc directory, for example. There are a few such directories associated with the kernel’s performance counter interface. (See the man page for perf_event_open().) The directory:

/sys/bus/event_source/devices/XXX/events

lists the performance events supported by the processor, XXX. In the case of the Raspberry Pi 4, XXX is “armv7_cortex_a15”. I was expecting “armv8_cortex_a72”.
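If you want to see what your own system reports, a few lines of Python will walk that directory tree. This is a minimal sketch under my own assumptions: `list_pmu_events` is a hypothetical helper name, and it takes the sysfs root as a parameter so it can be pointed at a copy of the tree. Event sources that have no events subdirectory are skipped.

```python
import os


def list_pmu_events(root="/sys/bus/event_source/devices"):
    """Map each event source under root to the sorted names in its events directory."""
    result = {}
    if not os.path.isdir(root):
        return result  # no perf event sources exposed on this system
    for device in sorted(os.listdir(root)):
        events_dir = os.path.join(root, device, "events")
        # os.path.isdir follows symbolic links, which sysfs uses for device entries
        if os.path.isdir(events_dir):
            result[device] = sorted(os.listdir(events_dir))
    return result


if __name__ == "__main__":
    for device, events in list_pmu_events().items():
        print(device)
        for name in events:
            print("   ", name)
```

The device names printed at the top level are the XXX values discussed here, so this is also a fast way to check which PMU driver the kernel actually bound to the processor.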

Supported performance events vary from core to core. Sure, there is some commonality (retired instructions, 0x08), but there are differences between cores in the same ancestral lineage! So, one must question which default events are defined with the current version of the Raspberry Pi OS.
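Each file in an events directory holds a short string of comma-separated terms, such as `event=0x08`, that encodes the raw event. To compare two cores, one can parse those strings and diff the results. The sketch below is illustrative only: `parse_event_terms` and `compare_event_sets` are hypothetical names of my own, and the parser assumes the `term=value` layout described in the kernel’s sysfs ABI notes, where a bare term with no value stands for 1.

```python
def parse_event_terms(text):
    """Parse 'term=value,term=value' into a dict; a bare term maps to 1."""
    terms = {}
    for item in text.strip().split(","):
        if not item:
            continue
        name, sep, value = item.partition("=")
        name = name.strip()
        if not sep:
            terms[name] = 1
            continue
        try:
            terms[name] = int(value, 0)  # base 0 accepts the 0x prefix
        except ValueError:
            terms[name] = value  # keep non-numeric values such as '?' as text
    return terms


def compare_event_sets(a, b):
    """Compare two name -> encoding maps.

    Returns (only_in_a, only_in_b, changed), where changed lists the names
    present in both maps but with different encodings.
    """
    only_a = sorted(set(a) - set(b))
    only_b = sorted(set(b) - set(a))
    changed = sorted(n for n in set(a) & set(b) if a[n] != b[n])
    return only_a, only_b, changed
```

Feeding it the parsed events from two different cores shows at a glance which event names exist on only one of them and which share a name but not an encoding.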

I found the symbolic perf_event_open() events like PERF_COUNT_HW_INSTRUCTIONS to be reasonably sane. However, beware of the branch, TLB and L2 cache events. One must be careful, in any case, since there really isn’t a precise specification for these events and actual hardware events have many nuances and subtleties which are rarely documented. [I’ve been there.] The perf_event_open() built-in symbolic events depend upon common understanding, which is the surest path to miscommunication and misinterpretation!

Copyright © 2020 Paul J. Drongowski